The Hard Problem

The long-awaited and probably excessively overwritten part 3 of my series on AI

Making a series of articles with either more or fewer parts than three was never an option, so here is the final part…for what it’s worth.

Actually, I can't imagine there will ever be another post you'll find here on Securiosity that does not at some point mention hyper functional technologies or the ways in which people perceive or use them, so the idea that this post represents the third and final part of a series is just me trying to convince myself that I've resolved something, like Tolkien (who didn't write three books), The Matrix (four movies), Toy Story (at least four movies)…or something like that.

Hey look. I'm just blurting this stuff out (warts and all) in the same way that people do. Some of it might sound weird and unconnected, and some of it even sounds wrong. A bit like a ChatGPT response…

The fact that I write stuff that doesn't make sense and ChatBots write stuff that doesn't make sense does not make us the same. Similarly, the fact that a ChatBot can write stuff that is infallibly correct to (figuratively speaking) a lot more decimal places than I can does not make it more intelligent than me.

A does not equal B.

You need to accept that there is - in fact - a spoon.

Alan Turing was clearly a very smart guy, but the test that bears his name is patently dumb.

Of course, there’s no way anyone anywhen is going to consider me as having anything even slightly comparable to the intellect of Mr Turing, but I sometimes wonder about these megaminded individuals and their scope for fallibility. Newton (of whom I remain a massive fan) was a staunch believer in alchemy, for instance. I suppose that once the romance of an idea implants itself in your head it's hard to dislodge the prettiest but stupidest ones, even when you're smart.

Turing lived in a different era. There are many things about him and his life that would have been so unrecognizably different had he been born half a century or so later. I wonder what that might have resulted in.

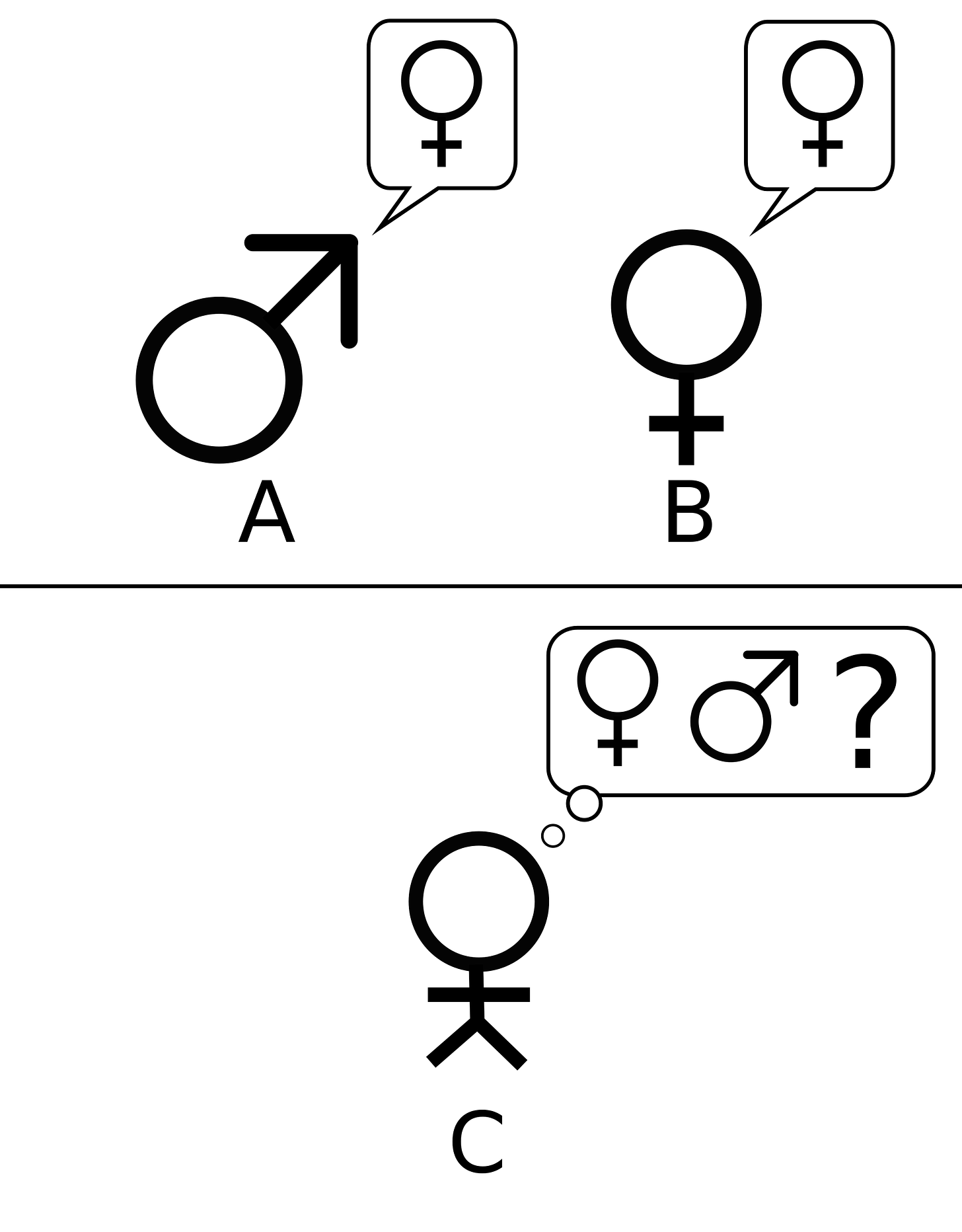

We will never know, but in the meantime let’s look at the Turing Test in its original form and see what it says. I'm borrowing some graphics from Wikipedia as illustration. I hope they don’t mind.

The original idea was presented in the form of a party game, as described by Alan Turing in "Computing Machinery and Intelligence". Using nothing but a series of written questions, person C attempts to determine which of the other two players A or B is a man, and which of the two is a woman. Player A, the man, tries to trick player C into making the wrong decision, while player B tries to assist player C in answering the puzzle correctly.

Who knows what might have happened if Alan had proposed such a test today - cancellation, perhaps - but you get the idea.

Mr Turing then asked : "What will happen when a machine takes the part of A in this game? Will the interrogator decide wrongly as often when the game is played like this as he does when the game is played between a man and a woman?"

The whole premise sounds a bit obscure to the modern ear, but the point of the exercise was to ponder whether or not a computer might be able to convincingly imitate a human being, and by so doing, demonstrate (artificially) intelligence.

As has become obvious in the recent past, it's perfectly possible to get a machine to give you text that is humanesque. I have not seen any mention of how well ChatGPT performs at gender spoofing, but I guess people just don't want to get themselves canceled.

Hook up the output from your ChatBot to a speech synthesizer and you're going to get fairly human sounding humanesque words (depending on the context and the astuteness of the listener).

Add a deep fake face and now the thing looks like a duck and quacks like a duck…

But it sure ain't no duck.

None of these enhancements to the ChatBot baseline equates to an increase in the intelligence of the underlying ChatBot. They simply add sensory dimensions to the original note passing scheme, which is clearly a legitimate thing to do if you want to truly be convinced that subject A or B is what they say they are in their notes.

If we're striving for human level intelligence - and hence are measuring what that is against humans - then surely the subject needs to be able to be convincingly human, and in order to straddle the uncanny valley, that means we need to be convinced that they are a real person.

Make a nice sound and reflect light off your surface in the appropriate way, add some smell and tactility to the subject and you’d be getting close. They're just more ways of adding authenticity to the simulated human with things that we actual humans know about what makes a person a human being.

Why not get a stethoscope and listen to the heart and breath? Why not take an echocardiogram? Conduct a CT scan to check out the quality of the imitation innards?

Perhaps ultimately (for now) we might perform a functional MRI scan of the subject and compare what we see with a number of authenticated test subjects.

These are all just extensions of the Turing Test, and in all cases - except the one with the text based notes - computers fail. Catastrophically.

Why does this matter?

What has making a simulated human got to do with making artificial super intelligence?

There is a school of thought that claims we are mere moments away from human level intelligence in computers, and that (by implication) this level of intelligence must be substrate independent, because there aren't any meat-based computers right now.

Many of these people are also of the view that consciousness is simply a matter of information processing - and therefore also substrate independent - and will simply show up once the substrate gets complex enough.

I'm not sure how many PetaFlops of performance or Terabytes of memory we might have to get to before we get to the right amount of complexity, by the way. Nobody has any idea, but that seems weird, because I'm quite sure that most human brains can't even manage one Flop per second and we're mostly all as conscious as hell.

Consciousness and intelligence are not the same thing. Even if you use Turing’s test to gauge whether something is intelligent or not, it is clearly no gauge whatsoever of whether player A or player B (or player C) is conscious.

We’ve had hyper-functional systems built on silicon for a very long time. These are systems that outperform people by many orders of magnitude when performing tasks that humans are slow at, i.e. number crunching.

Signal processing, image manipulation, encryption - they’re obviously all number crunching - but also chess and Go and driving a car with the aid of nothing but a few sensors. These functions are also number crunching when a computer is doing them.

However, these functions are not number crunching when people do them.

Despite all the advances in neuroscience, nobody has yet discovered the Arithmetic and Logic Unit in the brain where number crunching makes our kidneys work, but something up there has to be doing that math, because if it stops, the chemical imbalances in our blood will make us dead pretty darned quick.

The computer beating your pants off every time you play chess against it does not know that it is playing chess. It does not even know what physical reality is, so the spatial relationships between a bunch of horses and castles on a two dimensional grid, and the ways in which they can move, do not exist within its data sets. All is simply numerical representation. A mesh of logical connections and expressions that can be used to make billions of transformations per second on the data, resulting in weighted scores of probability that can be compared against one another (numerically) to find the best route to optimal score (victory) at each point in the game.
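To make that a bit more concrete, here is a minimal sketch of the idea using a toy game rather than chess. The game (a pile of counters, take 1, 2 or 3 per turn, whoever takes the last one wins), the function names and the +1/-1 scoring are all my own assumptions for illustration. Real chess programs are vastly more elaborate, but the principle of comparing numbers to pick a move is the same sort of thing.

```python
from functools import lru_cache

# Assumption for this toy game: a player may remove 1, 2 or 3 counters,
# and whoever removes the last counter wins.
MOVES = (1, 2, 3)


@lru_cache(maxsize=None)
def score(counters: int) -> int:
    """Score for the player about to move: +1 is a forced win, -1 a forced loss."""
    if counters == 0:
        # The previous player took the last counter, so the mover has lost.
        return -1
    # A position is worth the best of the negated scores it can hand the opponent.
    return max(-score(counters - m) for m in MOVES if m <= counters)


def best_move(counters: int) -> int:
    """Pick the legal move whose resulting position has the highest score for us."""
    legal = [m for m in MOVES if m <= counters]
    return max(legal, key=lambda m: -score(counters - m))


if __name__ == "__main__":
    for pile in (4, 5, 7):
        print(pile, score(pile), best_move(pile))
```

Nothing in there knows what a counter is, or what winning feels like. It is integers being compared with other integers, all the way down.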

I couldn’t even beat the pocket chess computer I owned in the mid 1980s set to ‘beginner’ mode, but I never needed to worry about the existential threat of AI while I drifted off to sleep on a long train journey.

One of the thought experiments AI advocates frequently use to justify the idea of computers taking over from people goes like this :

Imagine that we build a machine that can take apart your brain atom by atom and create a digital simulacrum of each of these atoms in a computer, replicating the precise state of each atom as it goes until it has made a copy of your brain inside its circuits and systems.

Some people claim that this digital copy must be an exact copy of you, your thoughts, memories and (indeed) your consciousness, because it is an exact copy of your atoms.

To me, this claim is clearly and absurdly untrue.

Even if we could create the most accurate atomic simulation possible, all the way down to the fundamental particle level, and could demonstrate that this atomic model behaved in every conceivable manner (digitally) the way an atom in the real world behaved, it is still not an atom.

As of now, we do not know how atoms work. If we were to model one accurately we would need a chunk of computer. We also do not really know how brains work, but we know really well how computers work - because we made them.

We know more than we used to about atoms, but in just the same way as we’ve happily (and successfully) used Newton's laws for several hundred years to do all manner of things, only to have Einstein come along and point out the holes in those laws, there are so many unknown, undecided and downright disputed things about the sub-atomic realm that it is clear we will have more Newton/Einstein style paradoxes to deal with in the future.

Our models are still just models.

Madame Tussauds is also filled with lifelike models, but none of them are an existential threat.

In my view we know even less about the human brain than we do about the atoms from which it is made.

In the year 2000, a paediatrician living in Wales was driven from her home by a self-styled vigilante group who collectively targeted her because they believed she was a paedophile.

Clearly this was the result of a misunderstanding, a lack of verification and probably a fair bit of group-think-driven hysteria. You could say that these people demonstrated a lack of intelligence, but in fact these were a group of human beings with brains operating on the same technologies as yours and mine (unless you’re a bot skimming my content).

They presumably all got out of bed that morning, made a cup of tea, dropped the kids to school and maybe even went to work and did their jobs. They did all of these tasks plus many more besides using the few pounds of meat squashed in between their ears with no add-ons, upgrades or ancillary components.

That was nearly 25 years ago, but even with that intervening time and all of the hype surrounding developments in machine intelligence, the human race still has not come up with a computer anywhere on this planet - not even in a top-secret research laboratory - that could perform any one of those tasks within the same context, reliably and safely, even with a human supervisor.

However, every computer on the planet could be programmed to compare the words “paedophile” and “paediatrician” and determine that they are not the same, and any computer with suitable access to the internet (or a stored copy of an English language dictionary) could look up the definitions of those two words and see that they are very clearly entirely different. And come to those conclusions very, very quickly.
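For the avoidance of doubt about how little effort that takes, here is a rough sketch. The `DEFINITIONS` table is a hypothetical stand-in for a stored dictionary (the wording is mine, not quoted from any real one), and the function names are made up for illustration. `difflib.SequenceMatcher` is part of the Python standard library.

```python
from difflib import SequenceMatcher

# Hypothetical stand-in for a stored dictionary; the definitions are
# illustrative wording, not quoted from any real dictionary.
DEFINITIONS = {
    "paediatrician": "a doctor who specialises in the medical care of children",
    "paedophile": "an adult who is sexually attracted to children",
}


def same_word(a: str, b: str) -> bool:
    """True only if the two words match once case and stray spaces are ignored."""
    return a.strip().lower() == b.strip().lower()


def spelling_similarity(a: str, b: str) -> float:
    """Ratio between 0.0 and 1.0 of how alike the two spellings are."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def same_meaning(a: str, b: str, definitions=DEFINITIONS) -> bool:
    """Crude check: do the two words carry the same stored definition?"""
    return definitions[a] == definitions[b]


if __name__ == "__main__":
    a, b = "paediatrician", "paedophile"
    print("same word?   ", same_word(a, b))
    print("similarity:  ", round(spelling_similarity(a, b), 2))
    print("same meaning?", same_meaning(a, b))
```

The spellings overlap a bit, the words are not the same, and the definitions are nothing alike. A machine gets there in microseconds, and it still has no idea what a child or a doctor is.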

A machine capable of acting as stupidly as the rampaging mob of humans mentioned above would need to be exhibiting what is known as Human Level Intelligence - or to put it another way, it would need to be a machine capable of thinking and acting in a manner similar to a human being. No machines currently do that.

Artificial General Intelligence is something a bit like that. It's an AI that can carry out intelligent tasks in any context without prior knowledge of the task to be performed.

When you get up in the morning, you have a vague idea of what you'll be doing today but you also possess the ability to do whatever comes up based on circumstances. Make a cup of tea, read the newspaper, drop the kids to school, do a job, inappropriately harass a paediatrician…

You do these things because of your brain-body integrated system.

I can imagine that if (for whatever reason) you happened to be a brain in a jar, you could be very intelligent and think of some cool stuff, but the kids are not going to school and very few paediatricians are likely to be chased from their homes.

Your inability to chase paediatricians is inevitably going to keep you focused on other less chasey things. You may still be very biased in your thinking but your abilities to act on those things will draw your behaviour in a different direction.

See the difference here between how I'm describing human level intelligence and AGI?

One is a hyper functional machine that bubbles away with a fluorescent light behind it (like a clever lava lamp), coming up with crazy sounding shit that I can't even understand, and the other is a muthafreakin killer robot that thinks stupid crap before I've even realised that I turned it on, and is rampaging through the streets fixing things that I never knew were a problem (like believing that people whose jobs start with a P are all a threat to the future of the universe and should be eliminated).

Neither is human. Neither is conscious. Both are hyper functional in comparison to human beings but cannot reproduce if trade embargoes prevent chips being made available to all on the global market.

There are a lot of hyper-functional machines, even today.

As I described in the previous episodes, we know from experience that there are people in the world who can paint or draw photorealistic images of people with enough detail to fool another human into believing that they are photographs. There are machines that can also do that, but way quicker. They are lava lamps.

There are people who can do amazing impersonations of other people's voices. There are also machines that can do that, but even better, and they're not limited to a few individuals. They can mimic anyone. Lava lamps.

There are a lot of machines that are designed to do things a human can do but that do it quicker or more easily. A power drill, for instance.

It does not mean they are going to take over the planet, and it very much does not mean that we should consider them to be human in the way Turing thought about it.

Only a few short years ago, we considered the octopus as just a wibbly-wobbly mollusc, good for calamari and sea-monster sci-fi. Turns out that they have about the same number of neurons as a dog, but instead of having one brain, octopuses have theirs distributed as sub-brains situated in the arms communicating with a master brain in the middle.

We do not have this hive-mind sounding distributed compute network, but we do have neurons running all over our bodies connecting the thinking-meat at the top with the doing-meat everywhere else.

Some of our doing-meat does what it does without us even thinking about it. IT folk might choose to say that our brain has an operating system (or maybe even a BIOS) that looks after all of the menial stuff (oxygen stuff, nutrient stuff and toxin stuff), and the conscious thoughts are like apps running on top. Our bodies are just peripherals and our nervous system is a tangle of USB cords. But this comparison comes about because we have computers to compare with and because we’re great at anthropomorphism.

What the human body is actually like is (wait for it)…a human body!

We’re made of meat. There are some distinct functional components (an arm, for instance) that do a job (nose picking, head scratching etc), and there are components that we think do a distinct job (a kidney, say) that clearly exhibit a particular behaviour in response to hormones and invisible brain stuff. But we don’t really know that this is the whole story.

Look at an eye, for instance. It's not inside the brain but its development (or lack thereof) postpartum influences the way structures relating to visual perception grow within the brain.

The stuff between our ears is way more complicated than any computer we've built up to now or are about to build any time soon.

We see science people talk about chunks of the brain and what they’re for as if this is fact - but actually it isn’t. The invention of functional MRI scanning has indeed enabled brain scientists to make incredible advances in the study of what’s going on in your head, and sometimes their studies have identified what the primary job is for some sections of your think-box, but brains are complex things.

In order to make any progress whatsoever when looking at activity using fMRI, you need to focus on small areas of activity and ignore a bunch of the background noise to prevent yourself being overwhelmed with information.

So, although studies have demonstrated (for instance) that the hypothalamus is responsible for regulating such things as anger and sexual desire, multiple other areas of the limbic system light up to some extent when you stimulate the subject to exhibit those emotions. The brain is not structured like a computer with individual components doing individual jobs. Everything you experience (both consciously and subconsciously) involves the whole brain and can involve chunks of the nervous system too. We do not have a clue how much what goes on in our heads is really influenced by the whole body, and (let's throw in something controversial but as yet not impossible) the whole universe (I'm going to come back to this in another episode when we get to quantum stuff that doesn't involve Paul Rudd).

There are countless instances where people have suffered damage to their brains as a result of injuries, illnesses or birth defects, only for the brain to retask and reconfigure, finding ways to cope with what functionality there is remaining.

There are also plenty of instances of people who suffer brain damage and are left in a vegetative state, where the base functions of the brain survive and are able to keep the operating system working (breath breathing, blood pumping, poop pooping), while consciousness and any state of self get snuffed out altogether.

We have no clue how either set of circumstances really happens. We've got a vague idea at best.

These are not things that can happen with a computer. We know this for an absolute fact.

If we pour a few chemicals (good ones, bad ones or psychedelic ones) into our bloodstream, all sorts of interesting things can start to happen.

Mood, pain, consciousness. All of these things and more can be altered, along with the operating system stuff that works at the chemical level that we aren't even aware of.

Even without mind altering hallucinogens we do things like dreaming and imagining. Imagine the computer equivalent. What's that? A stack overflow?

We go off track in the brain and we get beauty and horror.

Computers go off track and they're broken.

Pour just about anything into a computer and the darned thing is toast.

Maybe I'm being too limited in my conceptualisation of what computers do (I don't think so, but let's just open up the possibility). What might be the equivalent of a computer hallucinogen? Cosmic rays? Some other exotic form of electromagnetic radiation?

The computers of today (and those of the tomorrow that we are currently able to accurately predict) are not made of meat. Their operation is based on binary states of information and how these states can be used to represent other things - many of which do not exist in their native form as binary objects.

Quantum computing at the qubit level isn't binary, but all of the supporting infrastructure around those core elements remains conventionally digital, and the tools that are emerging with which people will attempt to harness the capabilities of quantum processing look awfully similar to the same sorts of tools we've used for a long time to make transistors do what transistors do.

This means that everything we do in a computer is still a model. Models are by definition approximate representations of something else. Sometimes they are very accurate, but those are models of things we understand sufficiently well to be able to make that judgement.

In this series, I’ve presented information on how computers fundamentally achieve the basic functions they're able to, and how - by hyper scaling the basic functions - these computers become capable of exhibiting hyper functional behavior.

I also presented some examples of how some people are capable of performing amazing functions that appear to the rest of us as talent. These functions would take the rest of us forever, because each tiny step is hard and most of us don't even know where to start in achieving the outcome. Machines have been built that mimic those fundamental functions and utilize their hyper functionality to achieve in moments what we untalented humans could only achieve in centuries - at normal functional pace rather than hyper functional pace.

If the Turing test were to require that a person compare a computer generated picture of an artificial person with a real image of a real person and decide which was real, then computers have been passing that test for decades, and if you were to buy a big enough computer you could do the same with video now in real time. But it's not a person, it's not Intelligent and it's not going to take over any planets any time soon - unless we meat based people hand over the keys to the kingdom because we're too dumb or deluded to realise that it's not real.

We cannot assume that a man is a man or a woman is a woman because they pass us a note that presents a convincing justification. We should not assume that a computer is equivalent to a human on the same basis.

Not on the basis of text, speech, video, stethoscope, ECG, CT, fMRI or even electron microscopy.

Humanity is not substrate independent. Functionality is.

Previous installments of the series can be found at :

Artificial Intelligence v Actual Stupidity

This is not a topic I particularly wanted to discuss like this, but events have made it necessary - and now that I have got to put pen to paper on the subject, I find that it’s impossible for me to get quickly to the nub of the matter without meandering my way through a number of side bars, background pieces and allegorical illustrations first.

AI Ain't Free of Artificial Ingredients

For your second visit to my AI buffet I’ve dished up an array of tidbits that demonstrate how exponential growth in transistor density has led the world to believe in magic, but don’t be deceived by what you find on your plate. It’s delicious but it’s not necessarily nutritious. If you missed out on the first course, pick up a spoon and head over to

What began as a series has turned into a bit of an obsession, and it’s likely that this topic is going to appear both regularly and frequently in future posts. However, that’s not the only topic of interest, and so for the next while I am going to look at a few other areas - particularly in relation to modes and methodologies of risk management and how we in this industry tend to do it.

Keep ‘em peeled, and join in on the conversation.